With rapid advances in computer vision, real-time object detection has become crucial for applications such as surveillance and traffic control. Among the many available algorithms, YOLO (You Only Look Once) stands out for its speed and efficiency, and pairing it with NVIDIA DeepStream provides a robust solution for real-time video analytics. This article explains how to run YOLO on NVIDIA DeepStream, covering the integration workflow, optimization techniques, and practical use cases.

Introduction to YOLO and NVIDIA DeepStream

Before going into implementation details, it is worth understanding the components involved. YOLO is one of the leading real-time object detection systems: it predicts bounding boxes and class probabilities in a single pass of the network, which makes it exceptionally fast for real-time applications. NVIDIA DeepStream, on the other hand, is a highly optimized video analytics platform that enables scalable, high-performance, real-time AI-based video analytics at an affordable cost.

Prerequisites

Hardware: Compatible NVIDIA dGPU or Jetson.

Software: NVIDIA DeepStream SDK, YOLO model files (weights and configuration), and a development environment with the necessary dependencies.

Understanding the Workflow

In the general workflow, DeepStream ingests and decodes the video input and passes the frames to the YOLO detector running on the GPU. The detector locates and classifies the objects in each frame, and the results are then either displayed (for example, as bounding-box overlays) or passed on for further processing.
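Because DeepStream is built on GStreamer, this workflow maps directly onto a pipeline of elements. As a minimal sketch, the Python function below assembles a `gst-launch-1.0` description for a single H.264 file using DeepStream's standard elements (`nvv4l2decoder` for decoding, `nvstreammux` for batching, `nvinfer` for inference, `nvdsosd` for drawing). The file names are hypothetical placeholders, and the display sink may need to change depending on whether you run on a dGPU or a Jetson device, so treat this as an illustration rather than a tested deployment.

```python
def build_pipeline(video_path: str, infer_config: str,
                   width: int = 1920, height: int = 1080) -> str:
    """Return a gst-launch-1.0 description: decode -> batch -> YOLO -> overlay -> display."""
    source = [
        f"filesrc location={video_path}",  # read the encoded video file
        "h264parse",                       # parse the H.264 elementary stream
        "nvv4l2decoder",                   # hardware-accelerated decoding into GPU memory
        "m.sink_0",                        # link into request pad sink_0 of the muxer named "m"
    ]
    processing = [
        # nvstreammux batches frames from all inputs (one stream here, so batch-size=1)
        f"nvstreammux name=m batch-size=1 width={width} height={height}",
        # nvinfer runs the YOLO model described by its configuration file
        f"nvinfer config-file-path={infer_config}",
        "nvvideoconvert",                  # convert frames to a format nvdsosd can draw on
        "nvdsosd",                         # draw bounding boxes and labels
        "nveglglessink",                   # on-screen display (platform dependent)
    ]
    return " ! ".join(source) + " " + " ! ".join(processing)
```

The resulting string can be passed to `gst-launch-1.0` on the command line, or to `Gst.parse_launch` from a Python application using PyGObject.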

Step-by-Step Integration

Preparation of the YOLO Model

- Export the YOLO model to the ONNX (.onnx) format. DeepStream uses TensorRT to convert the ONNX model into an inference engine optimized for the target NVIDIA GPU architecture, which shortens inference times.
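DeepStream's `nvinfer` element can build the TensorRT engine from the ONNX file itself on first start, but engine building can be slow, and the resulting engine is tied to the GPU model and TensorRT version it was built with. One alternative is to pre-build the engine on the target machine with TensorRT's `trtexec` tool. The sketch below only assembles that command line (`--onnx`, `--saveEngine`, and the optional `--fp16` precision flag); the file names and the `.engine` naming convention are assumptions, and the ONNX export itself depends on the YOLO implementation you use, so it is not shown.

```python
from pathlib import Path


def trtexec_command(onnx_path: str, fp16: bool = True) -> list[str]:
    """Build a trtexec invocation that serializes an ONNX model to a TensorRT engine."""
    onnx = Path(onnx_path)
    # Hypothetical naming convention: yolo.onnx -> yolo.engine next to the ONNX file
    engine = onnx.with_suffix(".engine")
    cmd = ["trtexec", f"--onnx={onnx}", f"--saveEngine={engine}"]
    if fp16:
        cmd.append("--fp16")  # allow FP16 kernels where the GPU supports them
    return cmd
```

On a machine with TensorRT installed, the list can be run with `subprocess.run(trtexec_command("yolo.onnx"), check=True)`.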

Configuring DeepStream

- Modify the DeepStream configuration to include the YOLO model. The model file paths, input dimensions, and other model-specific parameters go into the inference configuration file (a plain-text key-value file) that DeepStream uses to load and run the model.
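To make the configuration step concrete, the sketch below renders a minimal `nvinfer` configuration with Python's `configparser`. The section and key names (`[property]`, `[class-attrs-all]`, `onnx-file`, `network-mode`, `cluster-mode`, `pre-cluster-threshold`, and so on) belong to nvinfer's configuration format, but the values are illustrative assumptions. YOLO outputs also need a custom bounding-box parser, and the `parse-bbox-func-name` and `custom-lib-path` values here are hypothetical placeholders for whichever parser library you build or use.

```python
import configparser
import io


def make_nvinfer_config(onnx_file: str, labels_file: str, num_classes: int,
                        conf_threshold: float = 0.25, nms_iou: float = 0.45) -> str:
    """Render a minimal nvinfer configuration for a YOLO detector as key=value text."""
    cfg = configparser.ConfigParser()
    cfg["property"] = {
        "gpu-id": "0",
        "net-scale-factor": "0.0039215697906911373",  # 1/255: scale pixels to [0, 1]
        "model-color-format": "0",                    # 0 = RGB input
        "onnx-file": onnx_file,
        "labelfile-path": labels_file,
        "batch-size": "1",
        "network-mode": "2",                          # 0 = FP32, 1 = INT8, 2 = FP16
        "num-detected-classes": str(num_classes),
        "network-type": "0",                          # 0 = detector
        "cluster-mode": "2",                          # 2 = NMS clustering
        "maintain-aspect-ratio": "1",
        # Hypothetical placeholders: replace with your own YOLO output parser
        "parse-bbox-func-name": "NvDsInferParseYolo",
        "custom-lib-path": "libnvdsinfer_custom_impl_Yolo.so",
    }
    cfg["class-attrs-all"] = {
        "pre-cluster-threshold": str(conf_threshold),  # minimum confidence to keep a box
        "nms-iou-threshold": str(nms_iou),             # overlap above which boxes are suppressed
    }
    buf = io.StringIO()
    cfg.write(buf, space_around_delimiters=False)  # write key=value without spaces
    return buf.getvalue()
```

Generating the file from code keeps paths and class counts in one place; writing it by hand works just as well for a single model.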

Stream Handling

- Implement code to process the input streams, which can be live video feeds or stored video files. DeepStream's flexible architecture supports various input sources, so video from multiple origins (for example, network cameras and local files) can be handled in the same pipeline.
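As a sketch of handling several inputs at once, the function below turns a mixed list of RTSP URLs and local file paths into URIs and builds a `gst-launch-1.0` fragment with one `uridecodebin` (a standard GStreamer element that selects a demuxer and decoder from the URI) per source, all feeding a single `nvstreammux`. The assumption here is that `gst-launch-1.0` links each `uridecodebin`'s dynamic output pad once it appears; Python applications usually connect to the `pad-added` signal instead. The function names and example sources are hypothetical.

```python
from pathlib import Path
from urllib.parse import urlparse

URI_SCHEMES = {"rtsp", "http", "https", "file"}


def to_uri(source: str) -> str:
    """Return the source unchanged if it is already a URI, otherwise make a file:// URI."""
    if urlparse(source).scheme in URI_SCHEMES:
        return source
    return Path(source).resolve().as_uri()  # absolute, percent-encoded file URI


def build_source_bins(sources: list[str], width: int = 1920, height: int = 1080) -> str:
    """gst-launch fragment: one uridecodebin per input, all batched by one nvstreammux."""
    parts = [f"uridecodebin uri={to_uri(src)} ! m.sink_{i}" for i, src in enumerate(sources)]
    # batch-size equals the number of inputs, so a batch can hold one frame per stream
    parts.append(f"nvstreammux name=m batch-size={len(sources)} width={width} height={height}")
    return " ".join(parts)
```

The rest of the pipeline (`nvinfer`, `nvvideoconvert`, `nvdsosd`, and a sink) is appended after the muxer exactly as in the single-stream case.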

Object Localization and Motion Analysis

- With the model integrated, DeepStream applies YOLO to detect objects in the video streams. Depending on the use case, detection may need tuning, for example by adjusting the confidence and non-maximum suppression (NMS) thresholds.
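To show what these two thresholds control, here is a plain-Python sketch of confidence filtering followed by greedy per-class NMS. In nvinfer they correspond to `pre-cluster-threshold` and, with `cluster-mode=2`, `nms-iou-threshold`. This illustrates the technique only; it is not DeepStream's internal implementation, and the default values are common starting points rather than recommendations from this article.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    x1: float
    y1: float
    x2: float
    y2: float
    score: float
    class_id: int


def iou(a: Detection, b: Detection) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a.x2, b.x2) - max(a.x1, b.x1))
    ih = max(0.0, min(a.y2, b.y2) - max(a.y1, b.y1))
    inter = iw * ih
    union = (a.x2 - a.x1) * (a.y2 - a.y1) + (b.x2 - b.x1) * (b.y2 - b.y1) - inter
    return inter / union if union > 0 else 0.0


def filter_detections(dets: list[Detection], conf_threshold: float = 0.25,
                      iou_threshold: float = 0.45) -> list[Detection]:
    """Drop low-confidence boxes, then keep the best box among same-class overlaps."""
    candidates = sorted((d for d in dets if d.score >= conf_threshold),
                        key=lambda d: d.score, reverse=True)
    kept: list[Detection] = []
    for d in candidates:
        # Keep d unless a higher-scoring box of the same class overlaps it too much
        if all(k.class_id != d.class_id or iou(k, d) < iou_threshold for k in kept):
            kept.append(d)
    return kept
```

Raising the confidence threshold removes weak detections (fewer false positives, more misses), while lowering the IoU threshold merges overlapping boxes more aggressively, which can hide objects that genuinely stand close together.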

Output Processing

- The final step involves handling the output from object detection, which may include delineating objects with bounding boxes, counting items, tracking movement, or issuing alerts based on specific criteria.
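Counting and movement tracking usually rely on a tracker (in DeepStream, the `nvtracker` element) that gives each object a persistent ID across frames. With such IDs, counting objects that cross a virtual line becomes simple bookkeeping. The sketch below works on plain `(track_id, x1, y1, x2, y2)` tuples rather than DeepStream metadata, so reading the boxes out of the pipeline (for example, in a pad probe) is not shown; the class name, the horizontal counting line, and the alert rule are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class LineCrossingCounter:
    """Counts tracked objects whose box centre moves downward across y = line_y."""
    line_y: float
    alert_threshold: int
    count: int = field(default=0, init=False)
    _last_y: dict[int, float] = field(default_factory=dict, init=False)

    def update(self, tracks: list[tuple[int, float, float, float, float]]) -> bool:
        """Process one frame of (track_id, x1, y1, x2, y2); return True once the alert fires."""
        for track_id, _x1, y1, _x2, y2 in tracks:
            cy = (y1 + y2) / 2                 # vertical centre of the box
            prev = self._last_y.get(track_id)  # centre in the previous frame, if seen
            if prev is not None and prev < self.line_y <= cy:
                self.count += 1                # crossed the line top-to-bottom
            self._last_y[track_id] = cy
        return self.count >= self.alert_threshold
```

Because the counter compares each object's current position with its own previous position, an object is counted once per crossing, even if it lingers below the line for many frames.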

Industry Implementations

Trifork has successfully deployed YOLO with DeepStream in manufacturing, logistics, and environmental monitoring, improving efficiency, safety, and quality control.

Quality Control: With YOLO and DeepStream, manufacturers such as Velux achieve high accuracy in detecting defects in natural materials such as wood, significantly increasing throughput.

Flow Tracking: CPH Airport uses object detection and tracking to monitor baggage flows and reduce the number of lost bags, improving baggage recovery rates and passenger satisfaction.

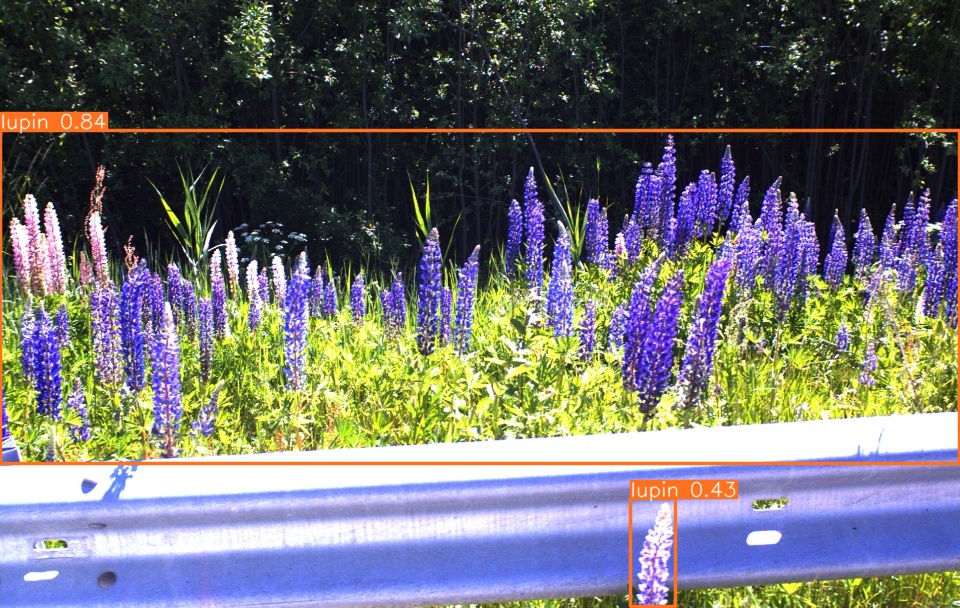

Environmental Monitoring: A YOLO model running on NVIDIA DeepStream identifies invasive alien plant species along highway verges, supporting biodiversity protection and more precise ecosystem management.

Combining YOLO with NVIDIA DeepStream delivers high performance and accuracy in real-time object detection. By following the steps outlined in this guide, developers can integrate video analytics into their own applications.

Author of the article:

Aleksandra Ludwiniak

Machine Learning Developer
