Spatial computing holds great promise for enterprise. No longer constrained by the physical size of your computer screen, you engage with information as part of the space you are in (real or virtual), in three dimensions, with your eyes, hands and voice as input devices. People can work in completely new ways with significantly better results, especially in areas like productivity, training and service.

Apple just announced several new software capabilities (APIs) that make applying this technology to your business significantly more impactful. For example, you can now recognize objects in your environment, scan barcodes, and share your exact point of view with a remote expert.

Read on for more detail on why we think these new announcements enable important new use-cases for spatial computing, what they might mean for your business, and how you can get started.

Working in Space

Spatial computing is using a computer in a way that is seamlessly blended with the physical world around you, rather than separated from it by a screen. It removes the physical constraints from your computer, so you can have much more information, presented in a much more engaging — even experiential — way. You no longer need to rely on a mouse, touchscreen, or keyboard; you use your eyes, hands and voice to control the system.

There are three major categories of enterprise use-cases for spatial computing today:

Productivity: a portable, private workstation for knowledge workers; command-and-control for emergency response and decision making; collaborative design with lifelike 3D objects.

Imagine a business leader consuming lots of data in order to make a decision. A spatial computer allows them not only to view vast amounts of data, but to do so in 3D. They can superimpose data onto a virtual object (like a map), or even walk inside a 3D representation of the data, and appreciate relationships that might not be visible on a 2D screen.

Training: experiential learning with objects, scenarios and environments that are very difficult to bring into the training room.

Imagine a doctor learning about a new procedure or medical device. They can see the correct technique demonstrated on a 3D model of a patient, look at the procedure from any angle, and step through the demonstration as many times as they need to feel ready. They can do all of this anywhere, completely privately, with dramatically better learning outcomes compared to videos and textbooks.

Guided Work: step-by-step instructions for employees overlaid on real-world objects, augmented by AI, with a remote expert available to see through your eyes.

Imagine an engineer servicing a wind turbine: a spatial computer can provide step-by-step instructions overlaid on the machine itself. Contrast that with looking at instructions on a tablet or laptop, where the user has to try to relate abstract instructions to the physical equipment. Because the instructions sit on the equipment itself, spatial computing can speed up work and reduce user error.

A note about terms: augmented reality (AR), virtual reality (VR), and mixed reality (MR) are all key technical components of spatial computing. The individual words might not matter as much as the key concept here: using a computer in a way that allows it to be integrated with the space around you (either a real space, or a virtual one).

So What’s New?

Apple just announced some highly consequential additions to visionOS that enable new capabilities organizations all over the world need:

Direct access to the main camera feed: Vision Pro has a high-fidelity view of the world around the user. Now you can make use of that information in your app, and process it on-device using a machine learning model. For example, you could aid the user inspecting parts on a production line, proactively highlighting potential issues.

Direct access to the user's view: previously, this was only available to one application, FaceTime. Now, developers can create their own solutions that share both the apps the user has open and their camera feed with others over a network. This is a game changer for remote assistance.

Custom object recognition: Vision Pro is now able to recognize objects that you specify, so you can use them in your app. That means you can superimpose virtual objects on the real world very accurately, and aid the user by helping them more quickly identify tools, parts and other equipment.

Spatial barcode scanning: retrieve information from most barcode (and QR code) formats for use in your app. This is great for rapidly scanning equipment or inventory to take quick action.

Increased platform control: Apple now allows enterprise developers to have more granular control over important APIs, and push the system performance higher (with an obvious trade-off around battery life). Imagine tracking a very large number of parts for a repair technician, highlighting the correct next part with step-by-step instructions.

Glue Pizza

As with all new technology, it’s important to understand its limitations. This is especially true for work tools. As we learned with mobile, these cannot be gimmicks, and must add real value to a workflow or process.

There are three major drawbacks to be aware of with Apple Vision Pro in enterprise today:

1 – Cost: $3500 USD is an expensive computer. The use-case outcomes must justify the hardware investment. There are much cheaper VR-only devices out there.

2 – Personalized fit: Apple designed Vision Pro to be worn comfortably for long periods of time. As a result, the device is highly calibrated to an individual user in both hardware and software. You also cannot wear your glasses with Vision Pro, and must have prescription lenses for any vision needs. It is difficult to rapidly share the device between users (though it is possible over longer work periods).

3 – Safety: pass-through video on Vision Pro is a borderline miracle, and can be used for all kinds of things, but if the device shuts down for any reason, the user is blind.

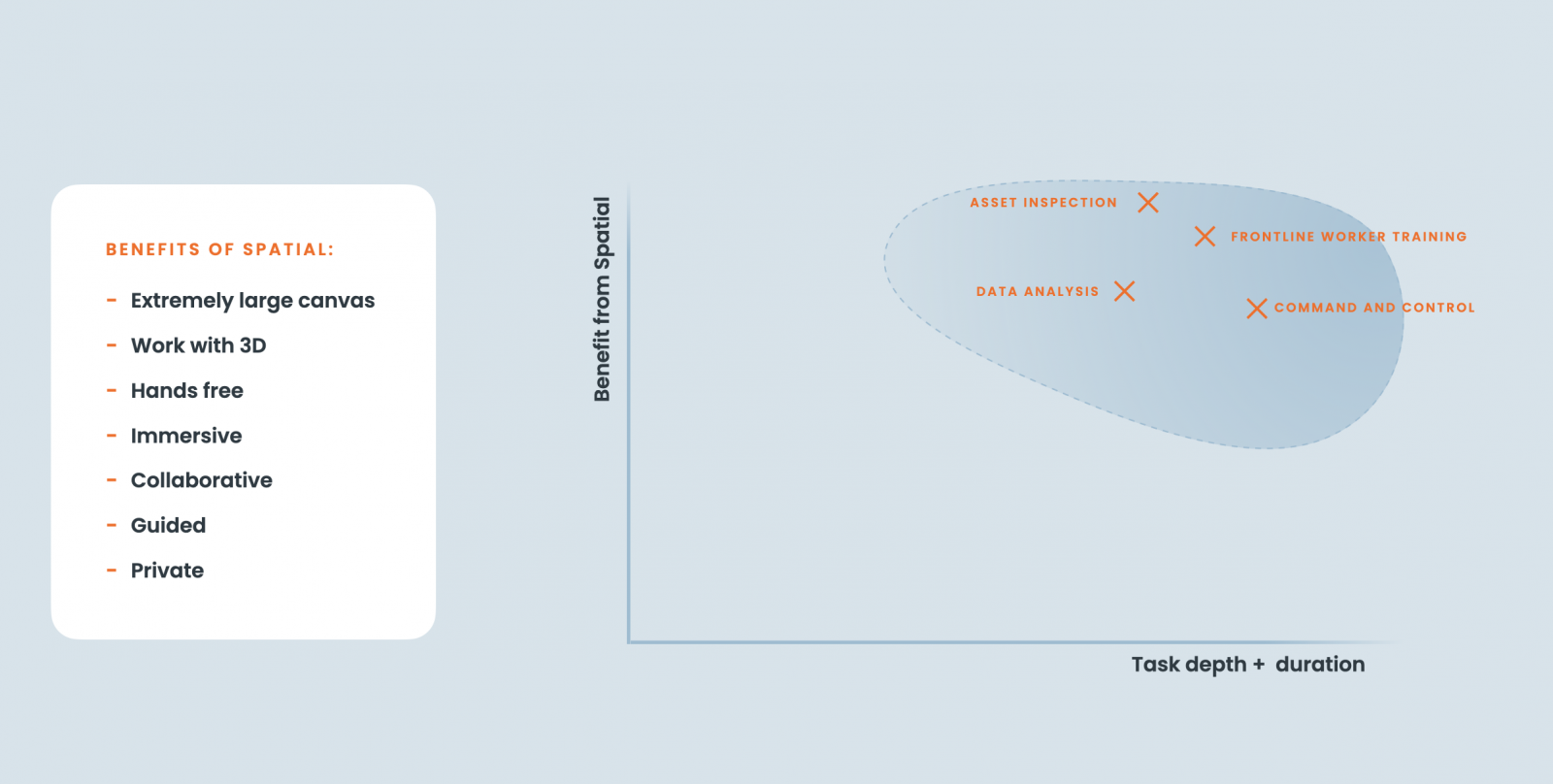

Spatial Computing adds acute value for business but does so in quite narrow ways (for now at least). The benefits of Apple Vision Pro are most obvious when the user:

– needs an extremely large canvas

– is working with 3D objects

– needs to have their hands free

– is more productive with an immersive experience

– should be guided with specific instructions

– needs complete privacy for confidential information

– wants to do all of the above while collaborating with remote participants

This is quite a bit to think about. We use the chart below to help you distill and prioritize use-cases based on real-world considerations. After we go through this exercise with clients, we then check each use-case for technical and business feasibility (ROI).
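As a rough illustration of a first-pass ranking, the sketch below scores each candidate use-case by how many of the characteristics listed above it exhibits. The `Trait` and `UseCase` names, the example use-cases, and the unweighted scoring are all assumptions made for illustration; this is not the chart or method referred to in the text, and a real exercise would weigh feasibility and ROI as well.

```swift
// Characteristics from the list above. The case names are illustrative.
enum Trait {
    case largeCanvas, objects3D, handsFree, immersive, guided, privacy, remoteCollaboration
}

// A hypothetical candidate use-case and the characteristics it exhibits.
struct UseCase {
    let name: String
    let traits: Set<Trait>

    // Unweighted score: one point per characteristic present.
    var score: Int { traits.count }
}

// Highest-scoring use-cases first.
func prioritize(_ cases: [UseCase]) -> [UseCase] {
    cases.sorted { $0.score > $1.score }
}

// Example candidates (assumed, not taken from a real engagement).
let candidates = [
    UseCase(name: "Expense approval", traits: [.privacy]),
    UseCase(name: "Turbine servicing",
            traits: [.handsFree, .guided, .objects3D, .remoteCollaboration]),
    UseCase(name: "Surgical training", traits: [.objects3D, .immersive, .privacy]),
]

let ranked = prioritize(candidates)
print(ranked.map(\.name))
// prints ["Turbine servicing", "Surgical training", "Expense approval"]
```

A use-case that matches only one characteristic, like the expense approval example, is probably better served by an existing device.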

Ready for Enterprise

You almost certainly have Apple devices deployed in your organization. Vision Pro has a distinct advantage here: the same security, privacy, deployment, and management tools and policies can apply to it right away — you deploy Vision Pro just like you deploy iPad.

Spatial computing has an additional security and privacy benefit: no one else can see what the user sees, which makes it the ultimate privacy screen. This means people can work with sensitive information in any setting (like an airport lounge).

visionOS connects to your enterprise systems like a mobile device. Any data that can be exposed via modern APIs can be easily used in a visionOS app. Most major enterprise systems support mobile today (SAP, Salesforce, Workday), and Trifork can help you design and deploy a modern architecture for data that is contained in legacy systems.
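To make the "like a mobile device" point concrete, here is a minimal sketch of decoding a JSON response into typed Swift values with Foundation's `Codable` and `JSONDecoder`, the same approach an iOS app would use. The `WorkOrder` type, its fields, and the sample payload are hypothetical; a real SAP, Salesforce, or Workday API will return a different shape, and the inline string stands in for a network response.

```swift
import Foundation

// Hypothetical payload shape, assumed for illustration only.
struct WorkOrder: Codable {
    let id: String
    let asset: String
    let steps: [String]
}

// Decode the JSON body returned by an enterprise API into typed values.
func decodeWorkOrders(from data: Data) throws -> [WorkOrder] {
    try JSONDecoder().decode([WorkOrder].self, from: data)
}

// Inline sample standing in for a response fetched over the network.
let sampleJSON = """
[
  {"id": "WO-1001", "asset": "Turbine 7", "steps": ["Isolate power", "Inspect gearbox"]}
]
"""

// Fall back to an empty list if the payload cannot be decoded.
let sampleOrders = (try? decodeWorkOrders(from: Data(sampleJSON.utf8))) ?? []

for order in sampleOrders {
    print("\(order.id): \(order.asset), \(order.steps.count) steps")
}
```

Because the decoding code has no dependency on visionOS itself, it could be shared between an existing iOS app and a new visionOS app.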

visionOS apps are built just like iOS apps, with one important exception: 3D models and environments. Working with these kinds of assets is normal for games, but not enterprise apps. Most organizations need to augment their team’s skills in this area.

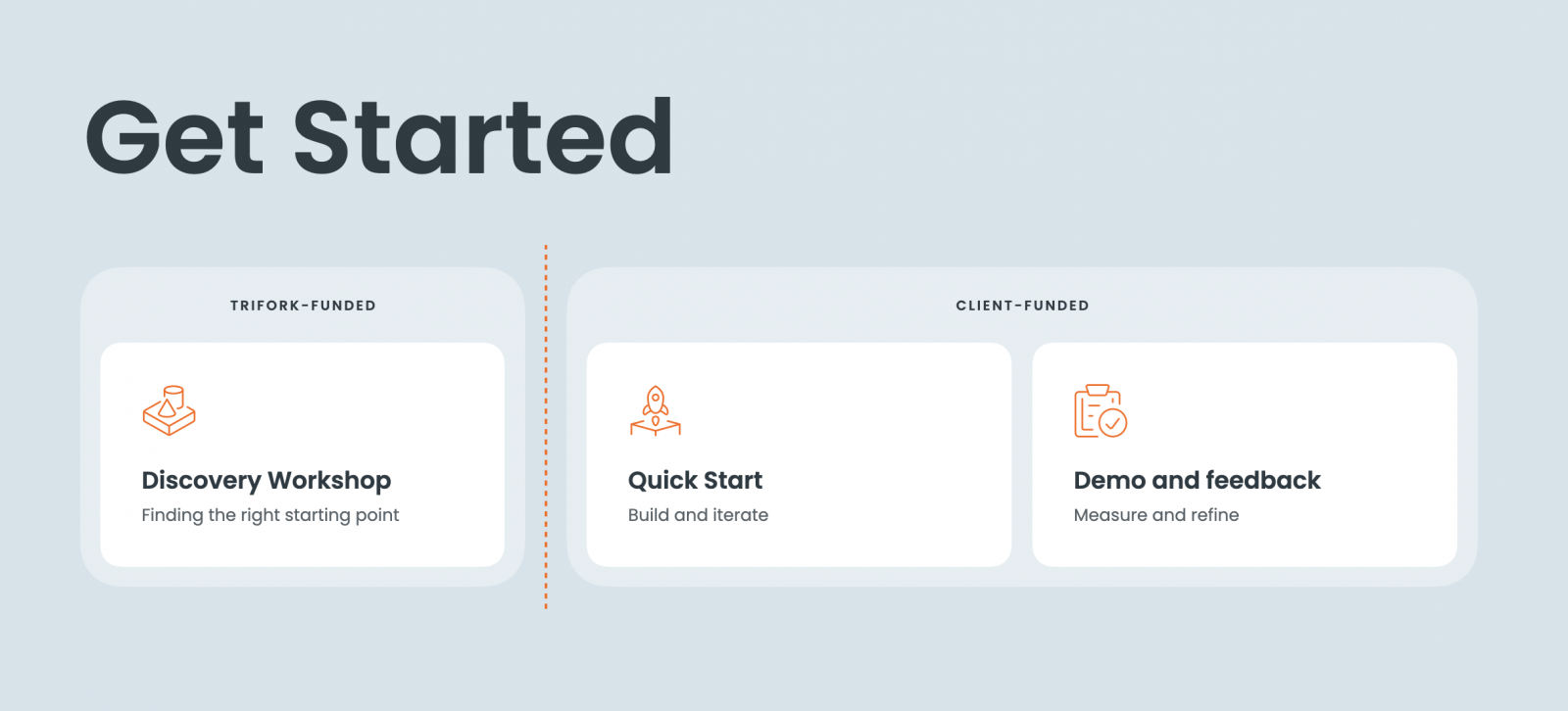

Get Started

We recommend following these guidelines:

- Ensure your use-case has the right characteristics to benefit from spatial computing (see the section above).

- Staff your team with both Apple and 3D graphics developers, designers and artists

- Intimately include the end-users in the design and testing process

- Start quickly, test daily, gather feedback, measure outcomes, and fine-tune rapidly

- Follow visionOS design best practices to minimize user training

- Measure and fit end-users, and ensure they have prescription lenses

- Deploy to a control group, and prepare to expand with the business need.

If you’d like to collaborate with us on any of the points above, we are here to help. We like to follow an engagement model like this:

Spatial computing can fundamentally change your employee productivity and customer experience for the better. These capabilities were not available before now. This is the beginning of something very significant.

When you start with the right problems — those that can uniquely be solved by spatial computing — you can very quickly build solutions, with mature, trusted tools from Apple, that can be deployed in the hands of users rapidly. Trifork has the depth of skills and experience to help you do this the right way.

Let us know how we can help.

Read more on: https://trifork.com/apple-vision/
