AWS Account Factory for Terraform: Get inside the black box by thinking outside the box

AFT, or Account Factory for Terraform, is part of AWS Control Tower. It allows accounts to be requested and vended via a GitOps model, with customisations applied as part of the process.

The intended use case is to bootstrap accounts with account-wide config and services, but not to deploy or provision applications. Some examples might be SSO IAM roles, service accounts, VPCs, networking to transit gateways, VPC Endpoints etc. In other words, all the things that need to be done whenever an account is created or when organisation-wide changes are applied.

The Challenge

In my experience, it is a very useful tool that helps to streamline account procurement and deployment. However, it has a few limitations and shortcomings.

First of all, AFT is not exactly a “first-class citizen”. There is no AFT section in the AWS Console, and there are no dashboards or AFT-specific metrics or alerts.

Second, and more importantly from a platform engineering perspective, it is very difficult to test updates to AFT code. The main reason for this is that it is not possible to apply from a feature or integration branch, only the default branch. This means that defects often only become apparent once the code is live, at which point it affects all new accounts and any existing accounts.

A particularly common scenario was a change that worked on freshly vended accounts but failed on existing accounts, or vice versa, with no easy way of observing what was going on.

All of this makes AFT feel like a bit of a black box: we cannot test outside of it, and it is difficult to observe what is going on inside.

What is needed is a method of hooking into the Terraform state which is already managed by AFT, to validate changes to a fresh or an existing account, and to diagnose issues in any accounts which appear to be failing.

Understanding how it works

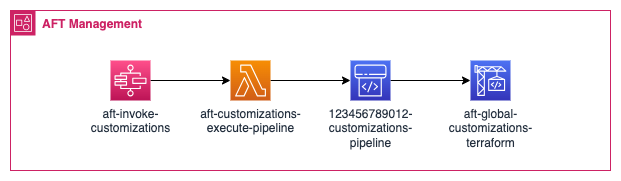

The first step is to understand what AFT does under the hood in a bit more detail. At a high level, the process is as follows:

“Invoke AFT, determine which account(s) are to be acted upon, configure a CodePipeline job for each account, run the pipeline which consists of one or more CodeBuild jobs”

Based on the input parameters, AFT determines which accounts should be acted upon and passes them to the aft-customizations-execute-pipeline Lambda, which creates and runs a CodePipeline job for each account with the appropriate customization steps for that account.

Typically this would be some “global” customizations, and then customizations specific to an account type (e.g. accounts in different OUs for different products etc.).
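Since there is no AFT view in the console, one way to see these per-account pipelines is through the CodePipeline CLI, run from the AFT Management account. The sketch below is illustrative only: the function names are hypothetical, and it assumes (this article does not confirm it) that each pipeline's name contains the vended account ID.

```shell
# List CodePipeline pipelines whose name contains the given account ID.
# Assumption: AFT includes the vended account ID in each pipeline name.
aft_list_pipelines() {
  local account_id="$1"
  aws codepipeline list-pipelines \
    --query "pipelines[?contains(name, '${account_id}')].name" \
    --output text
}

# Show the latest execution status of each stage in one pipeline.
aft_pipeline_status() {
  local pipeline_name="$1"
  aws codepipeline get-pipeline-state \
    --name "$pipeline_name" \
    --query 'stageStates[].[stageName, latestExecution.status]' \
    --output text
}
```

With credentials for the AFT Management account active, `aft_list_pipelines 111111111111` would print any matching pipeline names, each of which can then be passed to `aft_pipeline_status`.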

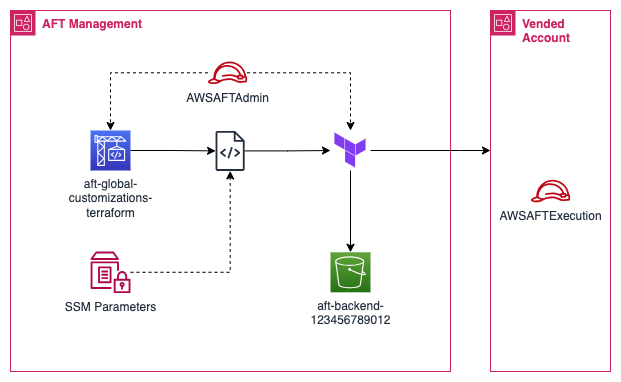

The really interesting part happens within the CodeBuild jobs, which is where AFT actually runs Terraform. The process within each CodeBuild job is as follows:

“Assume AWSAFTAdmin IAM role, collect backend config details from SSM parameters, generate backend and provider config files, execute Terraform, saving state in an S3 bucket”

The IAM roles used are documented here by AWS. Looking at the trust policy of AWSAFTAdmin, we can see that it can be assumed by any sufficiently privileged user or role within the account:

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::012345678901:root"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

This is important, as we will need to be able to assume a role within the AFT Management account, which itself can assume the AWSAFTAdmin role. This role executes Terraform, which in turn assumes the AWSAFTExecution role in the Vended Account to action any changes.
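To confirm what your own deployment trusts, the role's trust policy can be read with the IAM CLI. This is a minimal sketch that assumes credentials for the AFT Management account are already active; the function name is hypothetical.

```shell
# Print the AWS principals named in a role's trust policy.
# Defaults to AWSAFTAdmin; pass another role name to inspect it instead.
aft_trust_principals() {
  local role_name="${1:-AWSAFTAdmin}"
  aws iam get-role \
    --role-name "$role_name" \
    --query 'Role.AssumeRolePolicyDocument.Statement[].Principal.AWS' \
    --output text
}
```

If the output is the account's own root ARN, as in the policy above, any principal in that account whose IAM permissions allow sts:AssumeRole on the role can assume it.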

The other key point of interest here is the SSM parameters, and the files which they are used to configure. This is how AFT sets up Terraform to target the correct state file and the IAM role it needs to assume in the Vended Account.

If you examine any customization repo such as this sample, you will see that there are two Jinja2 template files, backend.jinja and aft-providers.jinja. The CodeBuild job pulls the necessary data from SSM parameters, and then uses these Jinja2 templates to generate backend.tf and aft-providers.tf files from the respective templates.

Taking a closer look at the log output of one of the CodeBuild jobs reveals this in action:

    if [ $TF_DISTRIBUTION = "oss" ]; then
    TF_BACKEND_REGION=$(aws ssm get-parameter --name "/aft/config/oss-backend/primary-region" --query "Parameter.Value" --output text)
    TF_KMS_KEY_ID=$(aws ssm get-parameter --name "/aft/config/oss-backend/kms-key-id" --query "Parameter.Value" --output text)
    TF_DDB_TABLE=$(aws ssm get-parameter --name "/aft/config/oss-backend/table-id" --query "Parameter.Value" --output text)
    TF_S3_BUCKET=$(aws ssm get-parameter --name "/aft/config/oss-backend/bucket-id" --query "Parameter.Value" --output text)
    TF_S3_KEY=$VENDED_ACCOUNT_ID-aft-global-customizations/terraform.tfstate
    cd /tmp
    echo "Installing Terraform"
    curl -q -o terraform_${TF_VERSION}_linux_amd64.zip https://releases.hashicorp.com/terraform/${TF_VERSION}/terraform_${TF_VERSION}_linux_amd64.zip
    mkdir -p /opt/aft/bin
    unzip -q -o terraform_${TF_VERSION}_linux_amd64.zip
    mv terraform /opt/aft/bin
    /opt/aft/bin/terraform -no-color --version
    cd $DEFAULT_PATH/$CUSTOMIZATION/terraform
    for f in *.jinja;
    do jinja2 $f \
      -D timestamp="$TIMESTAMP" \
      -D tf_distribution_type=$TF_DISTRIBUTION \
      -D provider_region=$CT_MGMT_REGION \
      -D region=$TF_BACKEND_REGION \
      -D aft_admin_role_arn=$AFT_EXEC_ROLE_ARN \
      -D target_admin_role_arn=$VENDED_EXEC_ROLE_ARN \
      -D bucket=$TF_S3_BUCKET \
      -D key=$TF_S3_KEY \
      -D dynamodb_table=$TF_DDB_TABLE \
      -D kms_key_id=$TF_KMS_KEY_ID \
      >> ./$(basename $f .jinja).tf;
    done
    for f in *.tf; do echo "\n \n"; echo $f; cat $f; done
    cd $DEFAULT_PATH/$CUSTOMIZATION/terraform
    export AWS_PROFILE=aft-management-admin
    /opt/aft/bin/terraform init -no-color
    /opt/aft/bin/terraform apply -no-color --auto-approve
    ...

This excerpt is for the open source (OSS) version of Terraform; however, AFT also supports Terraform Cloud and Enterprise. The TFC/TFE implementation will be very similar, just with slightly different input variables for the templates. For the purposes of this article, we will focus on OSS.
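To make the templating step more concrete, the sketch below prints the kind of backend.tf that the -D variables in the log could produce for the OSS case. It is not the real backend.jinja: the function name is hypothetical, and the field layout is an assumption based on Terraform's S3 backend settings, so check the template in your own customization repo. The bucket, table and key values in the example call are placeholders.

```shell
# Approximate the backend.tf the template step might generate.
# Arguments: region, bucket, key, DynamoDB lock table, KMS key ID, role ARN.
# Assumption: the template maps each -D variable onto the matching
# S3 backend setting; the real template may differ.
render_backend_sketch() {
  local region="$1" bucket="$2" key="$3"
  local ddb_table="$4" kms_key_id="$5" role_arn="$6"
  cat <<EOF
terraform {
  backend "s3" {
    region         = "${region}"
    bucket         = "${bucket}"
    key            = "${key}"
    dynamodb_table = "${ddb_table}"
    encrypt        = "true"
    kms_key_id     = "${kms_key_id}"
    role_arn       = "${role_arn}"
  }
}
EOF
}

# Example call with placeholder values only.
render_backend_sketch \
  "eu-west-1" \
  "example-aft-backend-bucket" \
  "111111111111-aft-global-customizations/terraform.tfstate" \
  "example-aft-lock-table" \
  "00000000-0000-0000-0000-000000000000" \
  "arn:aws:iam::123456789012:role/AWSAFTExecution"
```

The point to take away is that the state file location is fully determined by the bucket from SSM plus a key built from the vended account ID, which is what makes it possible to reproduce the same backend configuration outside of CodeBuild.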

Building a solution

After examining the process from the CodeBuild job, it is clear what we now need to do in order to hook into an AFT managed Terraform state.

- Assume AWSAFTAdmin IAM Role

- Extract details from SSM parameters and construct the required inputs

- Generate backend.tf and aft-providers.tf files

- Ensure we have the correct Terraform version

- Run terraform init

To achieve all this, we can construct a simple script, and a couple of utilities make the job easier. We need a way to switch IAM roles, for which we can use Granted, which reads AWS SSO/IAM profiles and allows easy switching between them. We also need to ensure we run the correct Terraform version, for which we can use TFSwitch, which will download and use the desired Terraform version.
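Before running anything against AFT state, it can be worth checking that the required tools are available. A minimal sketch follows; the function name is hypothetical, and the tool list in the usage comment reflects the commands used in this article.

```shell
# Report any required command that cannot be found on this machine.
# Returns 0 when every tool is available, 1 otherwise.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "Missing required tool: ${tool}" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (tool names as used in this article):
#   check_tools aws jq jinja2 tfswitch terraform assume
```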

The example script below uses these two tools, but you can modify it slightly to use other tools if required.

    #!/usr/bin/env bash
    # aft-bootstrap.sh
    # Copyright: 2024 OpenCredo Ltd.
    # License: MIT https://opensource.org/license/mit

    # Check that the PWD contains AFT Terraform files
    if ! [[ -f ./backend.jinja && -f ./aft-providers.jinja ]]
    then
      echo "ERROR: Not in an AFT Terraform directory or files missing"
      # return works when the script is sourced; exit is the fallback when it is executed
      return 1 2>/dev/null || exit 1
    fi

    # Input account ID
    VENDED_ACCOUNT_ID=${1}
    if [[ -z "${VENDED_ACCOUNT_ID}" ]]
    then
      echo "ERROR: No account ID supplied"
      return 1 2>/dev/null || exit 1
    fi
    echo "Targeting account: ${VENDED_ACCOUNT_ID}"

    ## Configuration values

    # AFT Management account ID - this must match your AFT Management account ID
    AFT_ACCOUNT_ID=123456789012

    # IAM SSO Role to assume - these must match your SSO/IAM profile in Granted
    # e.g. "aft-management/SSO-Administrator"
    ASSUME_AFT_MGMT_ACCOUNT="aft-management"
    ASSUME_IAM_ROLE="SSO-Administrator"

    # AWS Region which Control Tower runs in - this must match your CT setup
    CT_MGMT_REGION="eu-west-1"

    # Default config values - these should not need to be changed
    TIMESTAMP=$(date '+%Y-%m-%d %H:%M:%S')
    TF_DISTRIBUTION="oss"
    AFT_EXEC_ROLE_ARN="arn:aws:iam::${AFT_ACCOUNT_ID}:role/AWSAFTExecution"
    VENDED_EXEC_ROLE_ARN="arn:aws:iam::${VENDED_ACCOUNT_ID}:role/AWSAFTExecution"

    # Assume privileged role in AFT Management Account
    echo "Assuming ${ASSUME_IAM_ROLE} in ${ASSUME_AFT_MGMT_ACCOUNT}"
    assume "${ASSUME_AFT_MGMT_ACCOUNT}/${ASSUME_IAM_ROLE}"

    # Config derived from AFT Management Account SSM Parameters
    echo "Pulling config from AFT Management Account"
    TF_VERSION=$(aws ssm get-parameter --name "/aft/config/terraform/version" --query "Parameter.Value" --output text)
    TF_BACKEND_REGION=$(aws ssm get-parameter --name "/aft/config/oss-backend/primary-region" --query "Parameter.Value" --output text)
    TF_KMS_KEY_ID=$(aws ssm get-parameter --name "/aft/config/oss-backend/kms-key-id" --query "Parameter.Value" --output text)
    TF_DDB_TABLE=$(aws ssm get-parameter --name "/aft/config/oss-backend/table-id" --query "Parameter.Value" --output text)
    TF_S3_BUCKET=$(aws ssm get-parameter --name "/aft/config/oss-backend/bucket-id" --query "Parameter.Value" --output text)
    # State key for the account customizations; the CodeBuild log above shows
    # "<account_id>-aft-global-customizations/terraform.tfstate" for the global ones
    TF_S3_KEY="${VENDED_ACCOUNT_ID}-aft-account-customizations/terraform.tfstate"

    # Set Terraform version
    echo "Using Terraform ${TF_VERSION}"
    tfswitch "${TF_VERSION}"

    # Generate Terraform backend and provider config
    echo "Generating Terraform backend and provider config"
    for f in *.jinja;
    do jinja2 "$f" \
      -D timestamp="$TIMESTAMP" \
      -D tf_distribution_type="$TF_DISTRIBUTION" \
      -D provider_region="$CT_MGMT_REGION" \
      -D region="$TF_BACKEND_REGION" \
      -D aft_admin_role_arn="$AFT_EXEC_ROLE_ARN" \
      -D target_admin_role_arn="$VENDED_EXEC_ROLE_ARN" \
      -D bucket="$TF_S3_BUCKET" \
      -D key="$TF_S3_KEY" \
      -D dynamodb_table="$TF_DDB_TABLE" \
      -D kms_key_id="$TF_KMS_KEY_ID" \
      > "./$(basename "$f" .jinja).tf";
    done

    # Assume the AWSAFTAdmin role
    echo "Assuming AWSAFTAdmin role"
    # --output json ensures jq can parse the result whatever the CLI default is
    credentials=$(aws sts assume-role \
      --role-arn "arn:aws:iam::$(aws sts get-caller-identity --query "Account" --output text):role/AWSAFTAdmin" \
      --role-session-name AWSAFT-Session \
      --query Credentials \
      --output json)

    unset AWS_PROFILE
    AWS_ACCESS_KEY_ID=$(echo "$credentials" | jq -r '.AccessKeyId')
    AWS_SECRET_ACCESS_KEY=$(echo "$credentials" | jq -r '.SecretAccessKey')
    AWS_SESSION_TOKEN=$(echo "$credentials" | jq -r '.SessionToken')
    export AWS_ACCESS_KEY_ID
    export AWS_SECRET_ACCESS_KEY
    export AWS_SESSION_TOKEN
    unset credentials

    # AFT should be set up now
    echo "AFT config and credentials set up, initialising Terraform"
    # -reconfigure stops Terraform from attempting a state migration if this
    # directory was previously initialised against a different account
    terraform init -reconfigure

This script takes a single input, the AWS Account ID of the target AFT vended account, and can be run with ./aft-bootstrap.sh <account_id>. Because it exports credentials into the environment, source it instead (. ./aft-bootstrap.sh <account_id>) if you want those credentials to remain available in your current shell afterwards.

After running this script, you should see that Terraform has initialised and you can now inspect the state, run plans or even apply changes and fixes (e.g. move or import resources in the state etc.).
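Before moving or importing anything in an AFT-managed state, it is prudent to keep a local copy of it first. The sketch below is one way to do that with terraform state pull, which writes the remote state to standard output; the function name and the backup file naming are hypothetical.

```shell
# Save a timestamped local copy of the current remote state.
# Prints the backup file name on success; removes the partial file on failure.
aft_state_backup() {
  local account_id="$1"
  local backup_file
  backup_file="aft-state-${account_id}-$(date '+%Y%m%dT%H%M%S').json"
  if terraform state pull > "$backup_file"; then
    echo "$backup_file"
  else
    rm -f "$backup_file"
    return 1
  fi
}
```

For read-only inspection, terraform state list and terraform plan are usually enough. A backup like this mainly matters before state-changing commands, and the file should be treated as sensitive since state can contain secrets.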

This can be used ad hoc by engineers, but it could also be integrated into a CI/CD process to validate changes against reference accounts before merging them to the main branch.
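As a rough illustration of the CI/CD idea, the sketch below runs a plan against each reference account and reports which ones fail. Everything here is an assumption rather than a tested pipeline: the function names and the AFT_BOOTSTRAP variable are hypothetical, and in CI you would likely replace the Granted step in the bootstrap script with your pipeline's own role assumption. Because the backend key differs per account, reusing one working directory also relies on terraform init being run with -reconfigure.

```shell
# Plan against one account inside a subshell so that its credentials and
# environment do not leak into the next account's run.
# AFT_BOOTSTRAP is an assumed path to the bootstrap script.
plan_account() {
  (
    set -- "$1"   # becomes $1 inside the sourced script
    . "${AFT_BOOTSTRAP:-./aft-bootstrap.sh}" || exit 1
    # -lock=false avoids holding the state lock that AFT itself may need
    terraform plan -input=false -lock=false
  )
}

# Run plan_account for every account ID given and summarise the results.
# Returns 1 if any account failed to plan.
validate_accounts() {
  local failed=0 account_id
  for account_id in "$@"; do
    if plan_account "$account_id"; then
      echo "PASS ${account_id}"
    else
      echo "FAIL ${account_id}"
      failed=1
    fi
  done
  return "$failed"
}

# Usage (placeholder account IDs):
#   validate_accounts 111111111111 222222222222
```

A non-zero return from validate_accounts can then be used to fail the merge check.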

Conclusion

AFT is currently a bit of a black box, which makes continuous development and testing a challenge. However, with a little outside-the-box thinking, it is entirely possible to get inside the black box and figure out exactly what is going on, which helps with platform development, debugging, and CI/CD processes.

All that is needed is a privileged role in the AFT Management account, and a method of pulling all the necessary details together, as we have seen in the example above.

This blog is written exclusively by the OpenCredo team. We do not accept external contributions.