Google Cloud Spanner: our first impressions

Since we are currently in the process of evaluating various distributed “NewSQL” databases, we felt it was worth adding Cloud Spanner to the list. It is important to point out that at the moment the service is still in “beta” phase and there are some restrictions (for example you cannot have a multi-region Spanner instance), but functionally it should be close enough to the final version that it’s worth taking a look.

What is it exactly?

It is somewhat difficult to describe Google Spanner with a single label – it does not really fit into existing categories. Instead, let’s discuss it from several aspects:

Is it a distributed database?

Yes. Each Spanner instance is running on multiple appropriately sized nodes, managed by Google’s Cloud service. This provides scalability in terms of data size and transactional throughput and adds resilience to the system.

Is it a relational database?

Not fully. Although Spanner’s data model is fundamentally like any other relational database’s – there are pre-defined tuples of data that can be stored in relations (tables) and queried – it is lacking in terms of constraints.Primary keys can (in fact, must) be defined, and uniqueness can be enforced; however there is no concept of a FOREIGN KEY to its full extent (we are going to discuss INTERLEAVE shortly – it is not an exact match).From relational databases we expect the capability to enforce the integrity of the data, to ensure that it matches pre-defined constraints. Spanner’s capabilities are somewhat limited from this perspective.

Is it an ACID database?

Yes. In fact Google’s transactional guarantees are very strict, probably stricter than those of most commonly used RDBMS. Additionally, transactional timestamps match “wall clock” time, thanks to TrueTime technology.

Is it a SQL database?

Mostly yes. Although currently only the SELECT statement is supported – any mutating operation must be executed through Spanner’s API (a REST API with client libraries available for most major languages).

Is it CP or AP?

CP, effectively CA. Due to the distributed nature of Spanner, this is a valid question to ask. The relevant whitepaper states that Spanner is a CP system fundamentally:

Does this mean that Spanner is a CA system as defined by CAP? The short answer is “no” technically, but “yes” in effect and its users can and do assume CA.The purist answer is “no” because partitions can happen and in fact have happened at Google, and during (some) partitions, Spanner chooses C and forfeits A

However in Google’s infrastructure network partitions are so rare that effectively it can be treated as CA, users can assume 99.999% availability. In fact during Google’s history of using Spanner, most problems were not network related.

Setting up the infrastructure

Setup is trivial, there are almost no options that can be specified: you have to decide which region the Spanner instance should be placed in, and the number of nodes, and that’s it.Access control integrates with Google Cloud’s IAM mechanism. There is actually no fine-grained permission model though; you can have read/write permissions on an entire database but not on individual tables.An example command to set up an instance and a database from Google Cloud’s CLI looks like this:

gcloud beta spanner instances create spanner-test --config=regional-europe-west1

--description="Test Spanner" --nodes=3

gcloud beta spanner databases create test --instance=spanner-testTooling

The operational tooling supplied is exclusively Google Cloud’s Web UI or CLI. These give you the standard operations you’d expect: creating and deleting instances, databases, executing schema manipulation, do ad-hoc querying, monitoring key metrics, etc… Operations are accessible through Google Cloud’s REST API and in client libraries.Ad-hoc querying can be done via the Google Clout Web UI, but there is no officially supported standalone client of equivalent functionality. The gcloud CLI has a similar facility but it is a bit long-winded to use comfortably:gcloud beta spanner databases execute-sql test --instance spanner-test --sql=”"

Similarly, schema changes can be done through the web or via CLI, but it’s not particularly convenient:gcloud beta spanner databases ddl update test --instance spanner-test --ddl="”

The best way to create the schema in an automated fashion is probably to execute the necessary DDL on application startup time through the client API (it is also possible to interrogate the current schema).

The data model

There are two types of objects in Spanner: tables and indexes. These look mostly how you would expect from a traditional RDBMS. Here is an example definition of a table:

CREATE TABLE customer (

customer_id STRING(36) NOT NULL,

name STRING(50) NOT NULL

) PRIMARY KEY(customer_id);The only change is that the PRIMARY KEY clause is outside of the brackets.Data distribution is based on the PRIMARY KEY so care needs to be taken when choosing it in order to avoid cluster hotspots. Timestamps and monotonically increasing numbers are bad choices, while random information (e.g. user name) or UUIDs are good ones.As we noted previously, there is no way of defining a FOREIGN KEY relation between tables. However, Spanner has a concept that is somewhat similar at glance:

Interleaved tables

If you specify a table in the following way:

CREATE TABLE customer_order (

customer_id STRING(36) NOT NULL,

order_id STRING(36) NOT NULL,

created_at timestamp NOT NULL

) PRIMARY KEY(customer_id, order_id),

INTERLEAVE IN PARENT customer ON DELETE CASCADE;As a result the customer_order table’s rows will be physically co-located – interleaved with the parent table’s respective rows. There are a few restrictions on this:The “child” table must have a PRIMARY KEY that contains the parent’s primary keys first (column names must match)

- Inserting into the child table is only possible if the parent has a matching row (this is the closest construct to a

FOREIGN KEYconstraint that Spanner supports) - Rows from the parent table can only be deleted if

- There are no matching rows in the child table

ON DELETE CASCADEclause is specified which removes any associated data from the child table

- Parent-child relations can go to arbitrary depth

- But it’s recommended to keep the size of data that “belongs” under a single parent

PRIMARY KEYunder 2 GiB

Although there is a logical relationship introduced between the parent and child rows, the primary purpose of interleaving is to speed up certain queries – especially joins. This makes intuitive sense, since it’s directly altering the way the data is laid out on disk and making sure that the whole join query can be executed without accessing every node of the cluster.In this sense this model is actually much closer to Cassandra’s concept of a partition key with clustering columns (which is a very strong definition of a physical layout) than to a relational database’s foreign key constraint (which is fundamentally a logical relationship between data items).

Secondary indexes

The other construct available in the DDL of Spanner is a secondary index. Unsurprisingly you can create a secondary index in the following way:CREATE UNIQUE INDEX customer_by_name ON customer(name);

Please note the UNIQUE keyword – this index will enforce uniqueness of rows inserted into the customer table. Additionally, it is worth pointing out from the Spanner documentation that:

Cloud Spanner chooses an index automatically only in rare circumstances

This effectively means that having the index is not enough – when executing any queries you will explicitly have to specify (through the FORCE_INDEX directive) that you want to use one.

Programming model

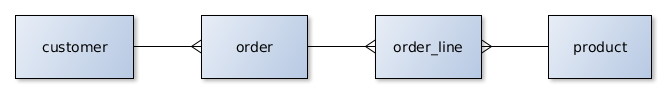

Google Cloud Spanner supports SQL’s SELECT statement only. That means you cannot execute any INSERT, UPDATE or DELETE statements and rely on common transaction control patterns. SELECT is fully supported (in fact with some extensions).As a result it is necessary to talk about the programming model – you are forced to use the APIs to do any data mutation. We are going to show some example code blocks to demonstrate how to interact with Spanner.Our example application manages customer orders in an online store. A Customer can have many Orders which in turn contain Items (order lines) of Products.

Querying

This is the code you would need to write to get a Customer record by its ID:

public Customer getCustomerById(final String id) {

final Struct result = client.singleUse().readRow(

"Customer",

Key.of(id),

Arrays.asList("customer_id", "name")

);

return new Customer(

UUID.fromString(result.getString("customer_id")),

result.getString("name")

);

}This is equivalent to the following:

public Customer getCustomerById(final String id) {

try (ResultSet rs = client.singleUse().executeQuery(

Statement.newBuilder("SELECT customer_id, name FROM customer WHERE customer_id = @id")

.bind("id").to(id)

.build()

)

) {

if (rs.next()) {

return new Customer(

UUID.fromString(rs.getString("customer_id")),

rs.getString("name")

);

} else {

return null;

}

}

}A couple of noteworthy observations:

- The

singleUse()call returns aReadContextobject which can be used to execute a single read statement. TheseReadContextsare not reusable. - The

ResultSetreturned when executing a query is not a JDBCResultSet, although there are similarities in behaviour ResultSetisAutoCloseable– it is always recommended to use it with a try block- Binding parameters to queries is only possible through the

Statement.Builderobject

Modifying data

Modification follows a similar pattern:

public Customer registerCustomer(final String name) {

final UUID id = UUID.randomUUID();

client.write(newArrayList(

Mutation.newInsertBuilder("customer")

.set("customer_id").to(id.toString())

.set("name").to(name)

.build()

));

return new Customer(id, name);

}You have to create a collection of Mutation objects – which can be done via the relevant builder objects – and then execute write(), which provides atomic execution of the mutations.

Using a secondary index

From the Java client there are two ways of specifying the secondary index to use; rely on the relevant API methods:

public Customer getCustomer(final String name) {

final Struct result = client.singleUse().readRowUsingIndex(

"Customer",

"Customer_by_name",

Key.of(name),

Arrays.asList("customer_id", "name")

);

return new Customer(

UUID.fromString(result.getString("customer_id")),

result.getString("name")

);

}Or use the FORCE_INDEX directive in the query language:

public Customer getCustomer(final String name) {

try (ResultSet rs = client.singleUse().executeQuery(

Statement.newBuilder(

"SELECT customer_id, name " +

"FROM customer@{FORCE_INDEX=customer_by_name} " +

"WHERE name = @name")"

.bind("name").to(name)

.build()

)

) {

if (rs.next()) {

return new Customer(

UUID.fromString(rs.getString("customer_id")),

rs.getString("name")

);

} else {

return null;

}

}

}

Transactions

Spanner has ACID transactions which it achieves using a combination of pessimistic locks and 2-phase commit. In the API the transactional constructs are centered around the TransactionRunner and TransactionContext classes.Our example code shows the addition of a new order. This needs to create the Order object as well as add each line to the Order – checking that there is enough stock to fulfill the order and adjusting inventory accordingly:

public Order placeOrder(final String customerName, final List items) {

return client.readWriteTransaction().run(transaction -> {

final Customer customer = customerService.getCustomer(transaction, customerName);

final Order order = createOrder(transaction, customer.getId());

items.stream().flatMap(item -> {

final Product product = productService.getProduct(transaction, item.getProductName());

final long requestedQty = item.getQty();

long remainingQty = product.getQty();

if (remainingQty < requestedQty) {

throw new InsufficienctStockException("Not enough product " + product.getName());

}

return Stream.of(

newUpdateBuilder("product")

.set("qty").to(remainingQty - requestedQty)

.set("product_id").to(product.getId().toString())

.build(),

newInsertBuilder("order_line")

.set("order_id").to(order.getId().toString())

.set("product_id").to(product.getId().toString())

.set("qty").to(requestedQty)

.set("unit_price").to(product.getPrice().doubleValue())

.build()

);

}).forEach(transaction::buffer);

return order;

});

}The most important aspects of this:

- The callback function (implemented as a lambda with a “transaction” parameter) can be executed multiple times if the transaction fails to commit. Database operations in that case are not preserved from any previous execution of the block, so you don’t need to worry about that. However any additional side-effect that falls outside of Spanner needs to be idempotent. For example, code that posts a message to an external messaging system might be executed multiple times

- Mutations for the transaction are only executed at commit time – you can buffer them in the TransactionContext but can’t observe their effects within the transaction

- The

TransactionContextobject can be passed around as necessary, but it is the application writer’s responsibility to do so (there is no “implicit” context stashed away in aThreadLocal, or other magic of that kind) - Throwing an unchecked

RuntimeExceptionwithin the transactional block is valid – this will cause a rollback without a retry, and the exception will be propagated to the caller

Configuring the client

The client library is available on Maven Central:

com.google.cloud

google-cloud-spanner

0.9.2-beta

<!-- exclude an old version of Guava -->

com.google.guava

guava-jdk5

<!-- exclude an old version of Servlet API -->

javax.servlet

servlet-apiNote the exclusions which we had to add to make our client code work – the Spanner library pulls in a large number of dependencies.To get your client talk to the database you need to configure access. This is relatively straightforward – the following simple Spring bean definitions result in a working client application:

@Bean

public SpannerOptions spannerOptions() {

return SpannerOptions.newBuilder().build();

}

@Autowired

@Bean

public Spanner spannerService(SpannerOptions options) {

return options.getService();

}

@Autowired

@Bean

public DatabaseClient spannerClient(

SpannerOptions options,

Spanner spanner,

@Value("${spanner.instanceId}") String instanceId,

@Value("${spanner.databaseId}") String databaseId

) {

return spanner.getDatabaseClient(DatabaseId.of(options.getProjectId(), instanceId, databaseId));

}On SpannerOptions there is a high number of additional properties such as timeouts and pool sizes that can be set if necessary (we have tested with the defaults).Access credentials can also be set via SpannerOptions, but the recommended approach is different:On Google Cloud Compute instances you get an environment pre-configured that is recognised by the Spanner client, which will pick up the appropriate Service Account to access Spanner (the Service Account needs to have sufficient IAM permissions to do this)On any other client:

- Create appropriate IAM user

- Create and download the key JSON file

- Export GOOGLE_APPLICATION_CREDENTIALS pointing to the key file

- Run the client which automatically picks up this environment variable

Performance

We have not done a detailed load test on Google Cloud Spanner, only some exploratory testing. We mainly were interested in the performance of joins, so here are some basic figures. Please note that these tests may have been limited by our testing infrastructure’s throughput and are not necessarily the limits of what Spanner can achieve. Additionally further tuning of the client’s settings (connection pooling) could improve observed performance.For testing we have used a 7 node Spanner cluster in europe-west1 region and 2 n1-highcpu-4 compute instances in different zones of the same region to run our test code.

Simple reads

In this case we were just reading single Customer records by primary key to establish a baseline performance. We observed roughly 16,000 transaction per second throughput (suspected to be limited by the testing nodes) with mean latency around 25ms and 99 percentile around 40ms.

Reading with joins using an interleaved table

In this case we wanted to read all the Orders (not the individual items just the order data itself) belonging to a single Customer by customer ID. The following query was used:

select

c.customer_id, c.name, o.order_id, o.created_at

from

customer as c

inner join

customer_order as o on c.customer_id = o.customer_id

where

c.customer_id = @customer_idThe observed numbers are very close to what we measured for the simple read scenario: 16,000 transactions per second, mean latency around 25ms and 99 percentile around 40ms.

Reading with joins using a non-interleaved table and no indexes

The third case we repeated the same join query as before, but using non-interleaved tables. The performance drop was quite significant, in fact the queries were so slow that we would not recommend doing this in a production system: 14 transactions per second, mean latency around 14 seconds 99 percentile around 23 seconds.Note that join performance may be dependent on volume of data in the various tables.

Reading with joins using a non-interleaved table and an explicit index on the join column

The final case that we have tried is using a non-interleaved table and put an index on the column that we’re joining on. Additionally this required a change in the query itself, which looked like this:

select

c.customer_id, c.name, o.order_id, o.created_at

From

customer as c

inner join

customer_order@{FORCE_INDEX=order_by_customer} as o

on c.customer_id = o.customer_id

where

c.customer_id = @customer_idNotice the usage of the explicit FORCE_INDEXdirective. If we omit this, Spanner ignores the index on the join and reverts back to not using the index and is exactly the same as the previous case.The FORCE_INDEX directive resulted in greatly improved performance. Note that though it’s still somewhat less efficient than using INTERLEAVE, the index can provide very good levels of performance: 14,000 transactions per second, mean latency around 30ms and 99 percentile just under 100ms.

Conclusion

First of all, Cloud Spanner is still declared beta, meaning you should at the very least be careful when adopting. However Google has been using it internally for years, which implies that at its core it’s fairly mature (and indeed we had the impression of a mature product when using it).What we really liked about it was the very strong ACID guarantees on transactions – if we were to single out the most impressive feature, this certainly would be it. The “relational” model given is nice, but it’s not “pure” as you’d expect a single-server RDBMS to be. We think that the compromises that Spanner has made are sensible ones – supporting arbitrary queries efficiently in a distributed system is difficult, and every attempted solution involves tradeoffs of some kind. Instead, Spanner does not shield you completely from the physical layout of the data and allows you to make the tradeoffs that make most sense for your use-case.

The other side of this point is that you have to pay attention to data modeling and have to understand not only the logical structure of the data, but the commonly used access patterns, too – at schema design time.Interestingly some strategies from the NoSQL world (especially from Cassandra) can be really handy here – each Cassandra table with complex primary keys can easily be translated to a tree of Spanner tables. However, Spanner takes this model further, since several levels of parent-child table relationships are permitted.

And finally, some of the bits we didn’t like: Spanner is not compatible with any other database technology out there. It has a completely proprietary API that you need to learn and adapt your code to. Additionally there is no fully functional bridge to common database APIs such as JDBC. The lack of tooling (e.g. a SQL “console” to explore data and experiment) makes day-to-day usage a bit unpleasant. Also, if you adapt Spanner you are locking yourself into Google Cloud – there is no way you would be able to run this database anywhere else but on Google’s infrastructure, and your code ceases to be portable.To summarise:

Pros:

- Strongly ACID semantics

- Distributed, fault-tolerant system

- High performance, scales horizontally

- Fully hosted and managed solution that “just works”

- “Close enough” relational data model

Cons:

- Non-standard APIs

- Relative lack of tooling support

- Not “pure” RDBMS – limited ways to enforce referential integrity

- Data modeling has to be approached differently

- Vendor lock-in

Should I use it?

If you are already a Google Cloud user and happy with it, yes. For any greenfield project, it’s worth considering if you have requirements for a very highly available and scalable datastore and you are not happy with the compromises you have to make with a NoSQL technology.

Migrating an existing RDBMS-based application to Cloud Spanner is a significant exercise.However, we don’t think it’s the ultimate database that solves every data storage problem. It’s not far away, it certainly covers a lot of use-cases and solves many problems, but does not give you the same level of flexibility as a traditional RDBMS would.

The bottom line is that we recommend some level of caution if you are thinking of adapting Google Cloud Spanner. From a purely technical perspective, it’s a great product, but it may not be what you need exactly. Understand your use-case and what you need from your database, play with the API a bit and decide whether you are happy or not with the lock-in; then make the decision to adopt it or not.

Update

If you like Google Spanner, but also want to run it on your own hardware take a look at CockroachDB – a new, open source, distributed SQL database inspired by Google Spanner. Here are our first impressions: https://opencredo.com/cockroachdb-first-impressions

This blog is written exclusively by the OpenCredo team. We do not accept external contributions.